Reference-Limited Compositional Zero-Shot Learning

Published in Proceedings of the 2023 ACM International Conference on Multimedia Retrieval (ICMR 2023)


Introduction

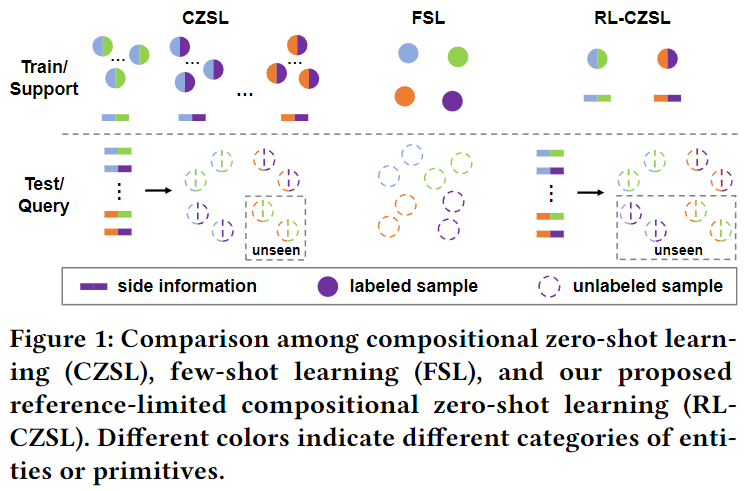

Compositional zero-shot learning (CZSL) refers to recognizing unseen compositions of known visual primitives, an essential ability for artificial intelligence systems that learn and understand the world. While considerable progress has been made on existing benchmarks, we question whether popular CZSL methods can handle settings with few samples and few reference compositions, which are common when learning in real-world unseen environments. In this paper, our contributions are:

- We introduce a new problem named reference-limited compositional zero-shot learning (RL-CZSL), where the model is given only a few samples of a limited set of compositions and must generalize to recognize unseen compositions. This offers a more realistic and challenging environment for evaluating compositional learners.

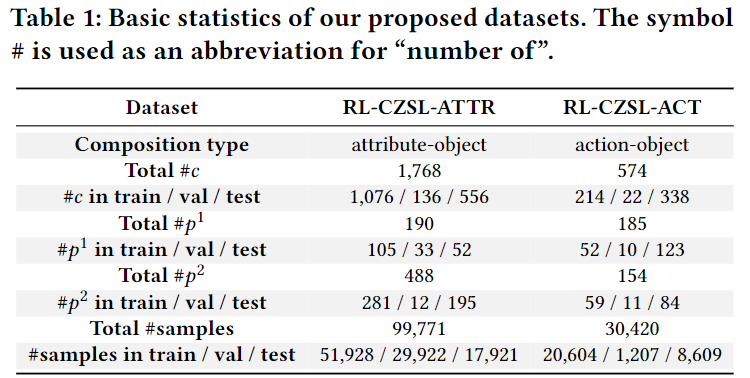

- We establish two benchmark datasets with diverse compositional labels and well-designed data splits, providing the required platform for systematically assessing progress on the task.

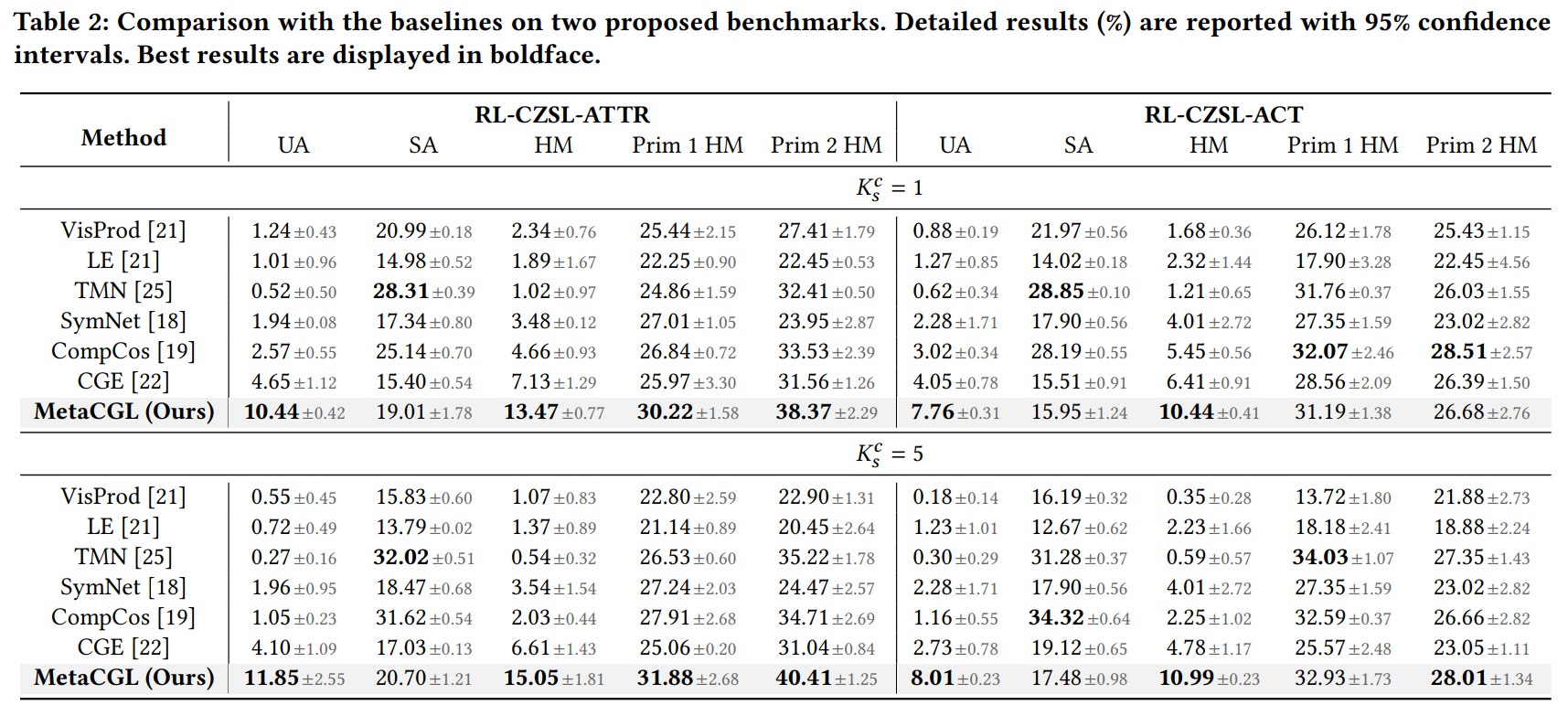

- We propose a novel method, Meta Compositional Graph Learner (MetaCGL), for the challenging RL-CZSL problem. Experimental results show that MetaCGL consistently outperforms popular baselines on recognizing unseen compositions.
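To make the RL-CZSL setting described above more concrete, the following is a minimal sketch of how a reference-limited split could be constructed from attribute and object vocabularies. It is an illustration only, not the benchmark construction used in the paper: the function name `make_rl_czsl_split`, its parameters, and the placeholder sample identifiers are all hypothetical, and real data would use images rather than strings.

```python
import itertools
import random


def make_rl_czsl_split(attributes, objects, num_reference, shots, seed=0):
    """Build a toy reference-limited split (hypothetical helper, not from the paper).

    Returns:
        support: dict mapping each reference composition to `shots` placeholder samples.
        unseen:  list of compositions absent from the references whose attribute
                 and object each appear in at least one reference composition.
    """
    rng = random.Random(seed)
    all_pairs = list(itertools.product(attributes, objects))

    # Only a limited number of compositions are available as references.
    reference = rng.sample(all_pairs, num_reference)
    reference_set = set(reference)

    # Primitives the learner has observed through the references.
    seen_attrs = {attr for attr, _ in reference}
    seen_objs = {obj for _, obj in reference}

    # Unseen compositions recombine known primitives in new ways.
    unseen = [
        (attr, obj)
        for attr, obj in all_pairs
        if (attr, obj) not in reference_set
        and attr in seen_attrs
        and obj in seen_objs
    ]

    # Few-shot: each reference composition has only `shots` samples.
    # Strings stand in for images here (assumption for illustration).
    support = {
        (attr, obj): [f"{attr}_{obj}_{i}" for i in range(shots)]
        for attr, obj in reference
    }
    return support, unseen


if __name__ == "__main__":
    support, unseen = make_rl_czsl_split(
        attributes=["red", "wet", "old"],
        objects=["car", "dog", "tree"],
        num_reference=4,
        shots=2,
    )
    print("reference compositions:", sorted(support))
    print("unseen compositions to recognize:", unseen)
```

In this sketch, a learner would be fit on `support` and evaluated on samples of the compositions in `unseen`. Lowering `num_reference` or `shots` makes the toy setting more reference-limited.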

BibTeX

If you find this work useful in your research, please cite our paper:

@inproceedings{Huang2023RLCZSL,
  title = {Reference-Limited Compositional Zero-Shot Learning},
  author = {Siteng Huang and Qiyao Wei and Donglin Wang},
  booktitle = {Proceedings of the 2023 ACM International Conference on Multimedia Retrieval},
  month = {June},
  year = {2023}
}